Together with practitioners, the team developed practical recommendations for organizations (see below) and launched Managing AIWISEly, a new program designed to train professionals as AI Polymaths who are equipped to co-produce data, co-explain, and co-deploy AI.

Collaborative AI: Practical tips for AI development

Avoid 3 ML Development Pitfalls with a Collaborative AI Approach

Pitfall 1: Treating domain experts as users instead of co-designers

🙅 Unproductive Question:

How can we get experts to trust and use our models?

🙌 Productive Question:

How can we involve experts and decision makers from the beginning in deciding what our model should focus on?

Pitfall 2: Aiming to produce “unbiased” predictions that experts will follow

🙅 Unproductive Question:

How can we produce predictions that are better and less biased than those currently offered by experts?

🙌 Productive Question:

What new insights, not currently available to experts but helpful for their decisions, can we realistically offer?

Pitfall 3: Underestimating the collective effort required to organize and label data

🙅 Unproductive Question:

What goals do we want to optimize for, and what datasets are available for building new models?

🙌 Productive Question:

What specific goal can we agree on together with experts and decision makers, so that we can focus our data sourcing and labeling efforts on the most critical parameters?

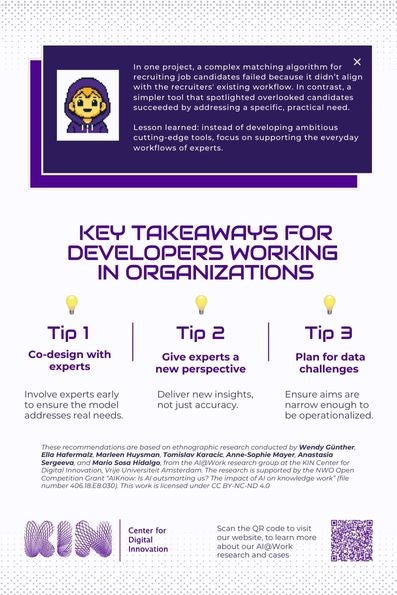

Key Takeaways for Developers Working in Organizations

Tip 1: Co-design with experts

💡 Involve experts early to ensure the model addresses real needs.

Tip 2: Give experts a new perspective

💡 Deliver new insights, not just accuracy.

Tip 3: Plan for data challenges

💡 Ensure aims are narrow enough to be operationalized.

These recommendations are based on ethnographic research conducted by Wendy Günther, Ella Hafermalz, Marleen Huysman, Tomislav Karacic, Anne-Sophie Mayer, Anastasia Sergeeva, and Mario Sosa Hidalgo, from the AI@Work research group at the KIN Center for Digital Innovation, Vrije Universiteit Amsterdam. The research is supported by the NWO Open Competition Grant “AIKnow: Is AI outsmarting us? The impact of AI on knowledge work” (file number 406.18.E8.030). This work is licensed under CC BY-NC-ND 4.0.

In one project, a complex matching algorithm for recruiting job candidates failed because it didn’t align with the recruiters' existing workflow. In contrast, a simpler tool that spotlighted overlooked candidates succeeded by addressing a specific, practical need. Lesson learned: instead of developing ambitious cutting-edge tools, focus on supporting the everyday workflows of experts.

How do organizations implement Artificial Intelligence?

Marleen Huysman talks about the research group and introduces the book on machine learning applications, 'S.L.I.M. managen van AI in de praktijk: Hoe organisaties slimme technologie implementeren' (S.L.I.M. Managing AI in Practice: How Organizations Implement Smart Technology).

Want to know more?

Please do not hesitate to contact us.